Biological Inspiration in Neural Networks

The foundation of artificial neural networks is inspired by the structure and functioning of the human brain. By mimicking the way biological neurons process and transmit information, neural networks can model complex relationships and learn from data efficiently.

1. The Human Brain and Neurons

In our brain, we have neurons, which are like tiny messengers.

Each neuron receives information from other neurons, processes it, and

sends the result to other neurons.

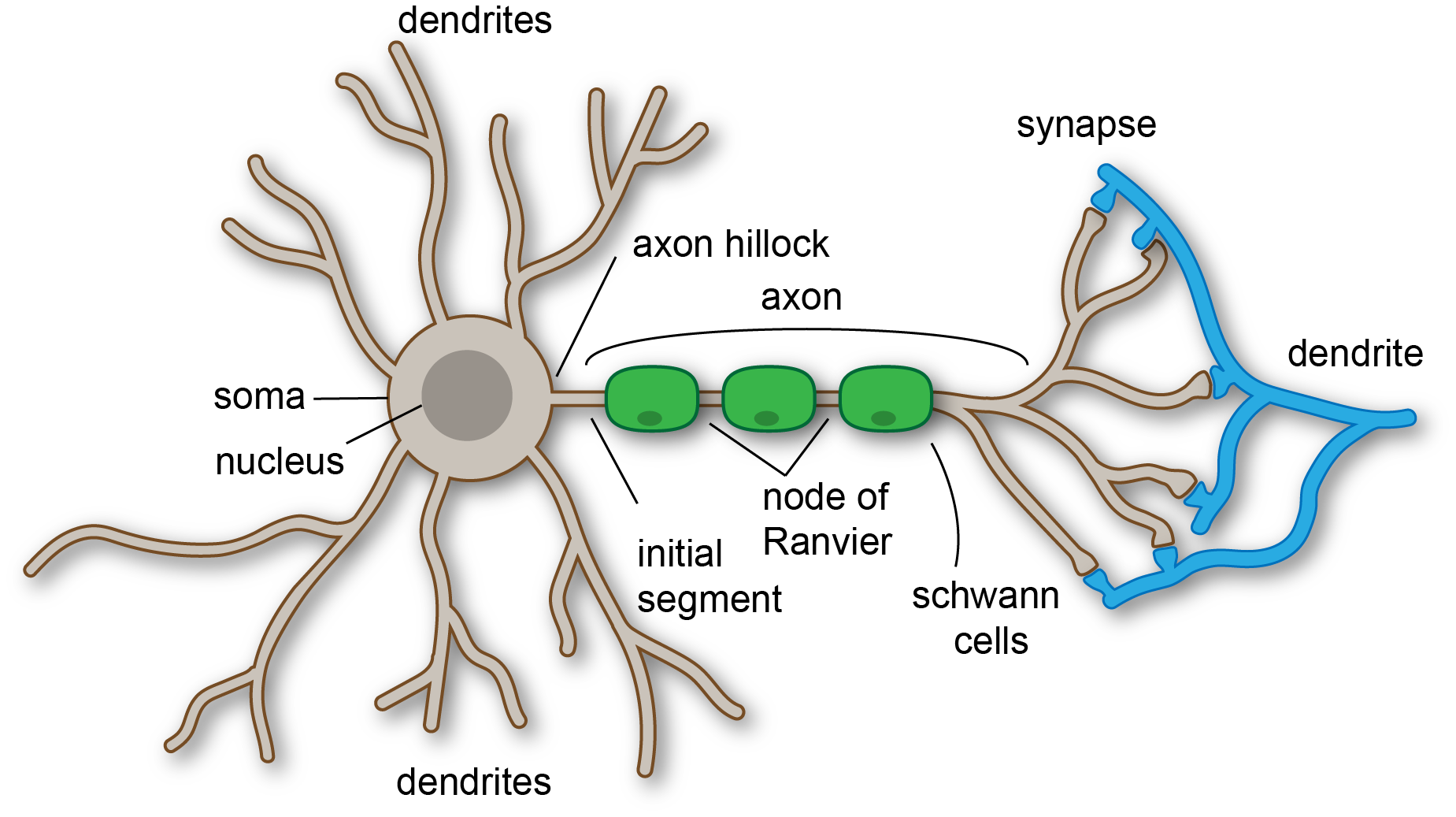

Structure and Function of Biological Neurons

- A biological neuron is a specialized cell in the nervous system responsible for transmitting information throughout the body.

- Each neuron consists of:

- Dendrites: Branch-like structures that receive electrical signals from other neurons.

- Cell body (soma): Processes incoming signals.

- Axon: Long projection that transmits the signal to the next neuron.

- Axon terminals: Ends of the axon that send signals to neighboring neurons.

Synapses and Neurotransmission

- The synapse is the junction between two neurons where communication occurs.

- Signals travel from the axon terminal of one neuron to the dendrite of another.

- This communication is chemical and electrical:

- Electrical signal → triggers release of neurotransmitters

- Neurotransmitters cross the synapse and bind to receptors on the receiving neuron

- The receiving neuron either fires (activates) or does not fire based on the signal strength.

Example:

Imagine you touch a hot cup.

Your sensory neurons send a signal (“it’s hot!”) to your brain,

your brain processes it, and your motor neurons tell your hand to pull away.

2. Artificial Neurons

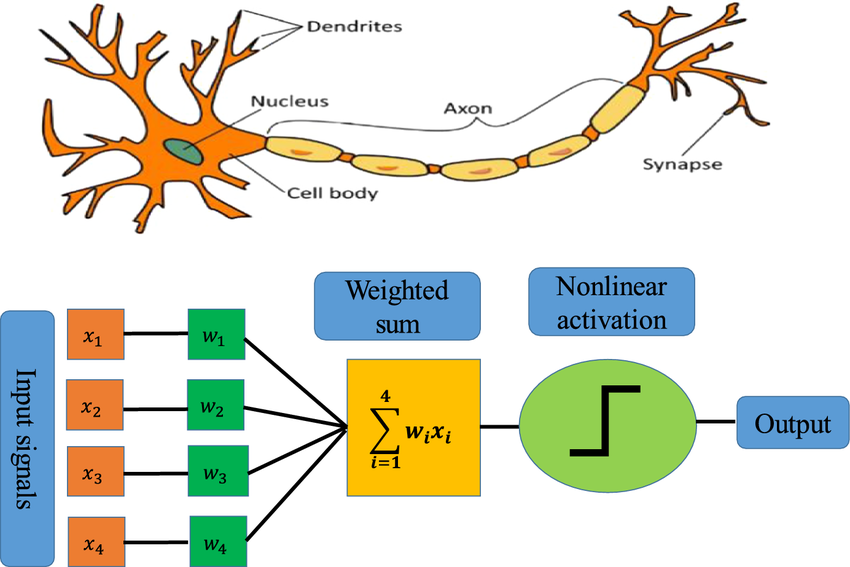

In deep learning, we try to mimic this behavior using artificial neurons.

Artificial neurons (also known as perceptrons) are mathematical functions inspired by biological neurons. They form the building blocks of artificial neural networks (ANNs).

An artificial neuron is a simple mathematical function that: 1. Takes some inputs (like numbers). 2. Applies weights (importance) to each input. 3. Adds them up. 4. Passes the result through an activation function (like deciding whether the message is important enough to send).

Structure of an Artificial Neuron

Each artificial neuron performs a weighted sum of inputs, applies an activation function, and produces an output.

Mathematically:

\[\large y = f\left(\sum w{\scriptstyle i} x{\scriptstyle i} + b\right)\]Where:

- xi: Input features

- wi: Weights applied to inputs

- b: Bias term

- f: Activation function (e.g., sigmoid, ReLU)

- y: Output

Components:

- Inputs: Data fed into the neuron (e.g., pixel values of an image).

- Weights: Indicate the importance of each input.

- Bias: A constant value that helps shift the activation function.

- Summation Function: Computes the weighted sum of inputs.

- Activation Function: Determines whether the neuron should fire (produce an output).

3. Comparison: Biological vs. Artificial Neurons

| Aspect | Biological Neuron | Artificial Neuron |

|---|---|---|

| Basic Unit | Neuron (nerve cell) | Perceptron or node |

| Input | Electrical signals from dendrites | Numeric features or signals |

| Processing | Integration in the cell body | Weighted sum of inputs + bias |

| Output | Signal via axon terminals | Output value after activation function |

| Signal Transmission | Chemical (neurotransmitters) + Electrical | Purely mathematical computation |

| Learning Mechanism | Synaptic plasticity (adjusts synaptic strength) | Weight adjustment via backpropagation |

Summary

- Biological neurons are the basis for thought and learning in the human brain.

- Artificial neurons mimic their structure and behavior mathematically to process data.

- While simpler, artificial neurons and their networks have enabled machines to learn complex tasks such as image recognition, speech processing, and decision making—mirroring aspects of human cognition.

Understanding this analogy helps bridge the gap between neuroscience and artificial intelligence, providing a strong foundation for grasping how neural networks function.